AIverse Design is a curated platform exploring how artificial intelligence intersects with design, creativity, and digital experiences.

The Missing Playbook for Designing AI Products

(A practical, human guide for builders who are tired of guessing)

Before we begin, here’s the rough skeleton we’ll follow — because structure matters, especially when we’re talking about AI systems:

Outline

- Why AI products feel different (and why old UX rules fall short)

- The 5-Part AI Design Pattern Framework

  - AI Onboarding (Entry Touchpoints)

  - User Input (Expressive Input + Expanding Context)

  - AI Output (Processing + Explainability + Output Types)

  - User Refinement (Correction & Iteration)

  - System Learning (Memory + Feedback + Personalization)

- How these pieces connect into a living system

- Common traps founders and teams fall into

- A closing note on responsibility and craft

Now let’s talk honestly.

Why AI Products Feel… Different

Designing AI products isn’t like designing a dashboard.

It isn’t like building a marketplace.

And it definitely isn’t like shipping a static SaaS tool.

AI products behave. They respond. They adapt. They surprise.

That’s both their magic and their danger.

Traditional UX patterns assume deterministic systems. Click a button, get a predictable result. AI systems don’t play by those rules. They generate. They infer. They sometimes hallucinate. And they always carry a layer of uncertainty.

This means your design can’t be solely about usability.

It has to be about setting expectations, calibrating trust, and fostering collaboration.

The PDF framework from AIVerse.Design outlines this beautifully. On page 2, the arc diagram shows the lifecycle clearly:

AI Onboarding → User Input → AI Output → User Refinement → System Learning

It’s not a funnel.

It’s a loop.

And that loop is your real product.

Part 1: AI Onboarding — First Impressions Matter More Than You Think

(Entry Touchpoints)

The first interaction with an AI system shapes everything that follows.

The framework describes this as the moment when users “form their first impression” and “learn how to begin.”

That sounds simple. It’s not.

Here’s the thing: AI creates ambiguity.

- What can this system actually do?

- Is it a collaborator or an oracle?

- Is it confident? Experimental? Risky?

If you don’t answer these questions immediately, users hesitate. Or worse — they assume too much.

What Smart AI Onboarding Does

The framework highlights patterns like:

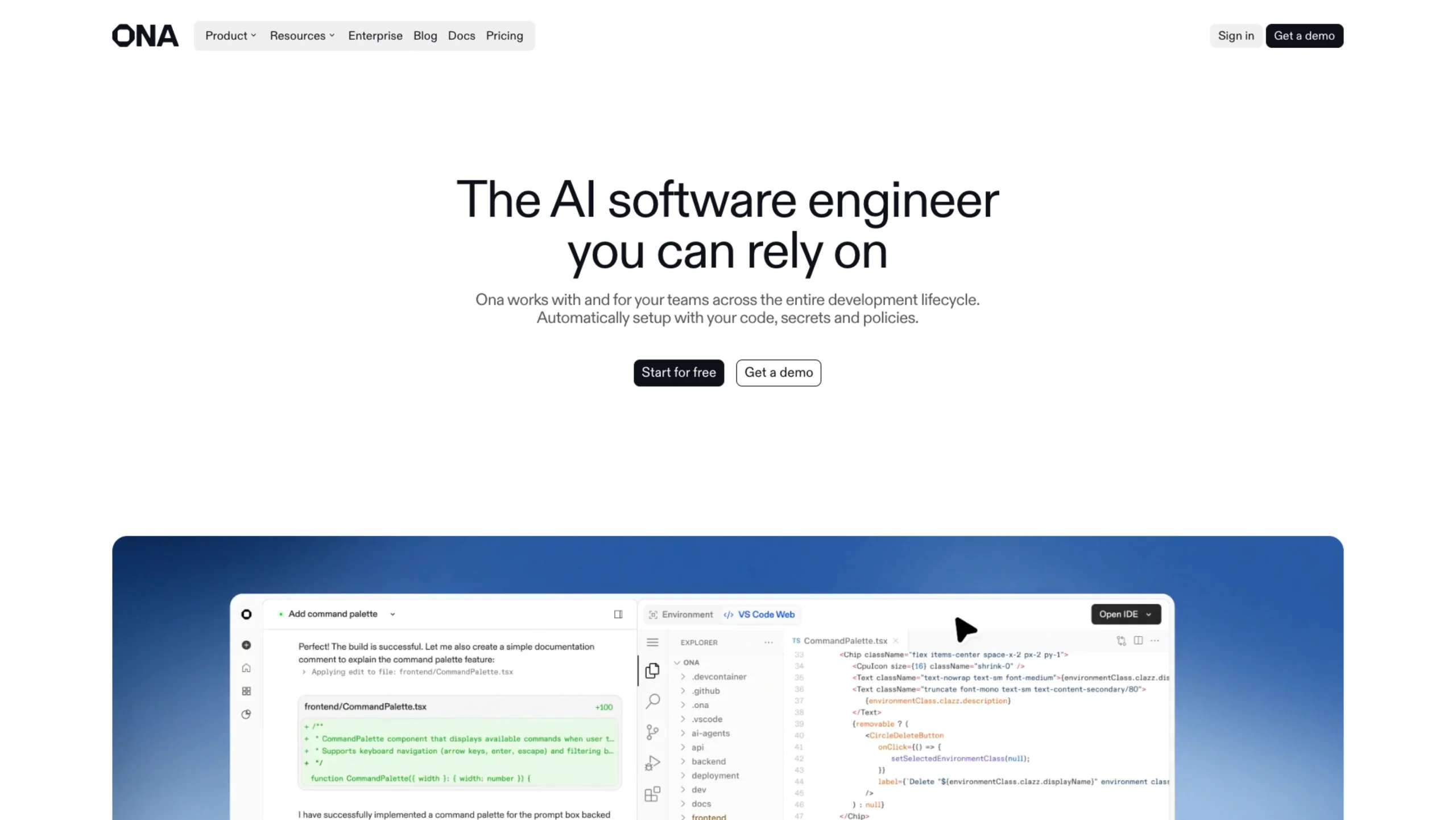

- Disclaimers (e.g., GitHub Copilot saying, “I can help, but I make mistakes.”)

- Suggested prompt cards

- Friendly icons or visual signals

- Search affordances and open input fields

Notice something? These aren’t flashy AI features.

They’re clarity mechanisms.

Good AI onboarding:

- Reduces prompt anxiety

- Signals capability without overpromising

- Shows how structured or free-form interaction should be

If users stare at a blank input field and think, “What am I supposed to type?” — you’ve already lost momentum.

AI products don’t need hype.

They need orientation.

Part 2: User Input — Designing How Humans Think

(Expressive Input + Expanding Context)

Most teams obsess over output quality.

Few obsess over input design.

That’s a mistake.

This framework explains that expressive input shapes how the AI “pays attention” to user intent. And that phrase — pays attention — is important.

Because users don’t just input text. They express intent.

Expressive Input: Beyond the Prompt Box

The framework gives examples like:

- Voice input (Grok)

- Gesture input (Humane AI Pin)

- Text selection tools

- Visual manipulation (moving elements in a 2×2 grid in Figma)

Why does this matter?

Because natural language isn’t always enough.

Creative tools, mobile interfaces, and multimodal systems benefit from richer input channels. When someone drags a slider to change tone instead of typing “make it more formal,” the system feels intuitive.

It reduces friction.

It reduces prompt fatigue.

And honestly, it reduces embarrassment for users who don’t know how to phrase things “correctly.”

Expanding Context — Giving the AI Better Ingredients

This framework outlines patterns for expanding context: file uploads, model selection, and knowledge base integration.

This is where AI moves from generic to useful.

Examples include:

- Uploading documents (Sana AI)

- Selecting chat history or model versions

- Filtering knowledge bases (Writer AI)

Let me say this clearly:

AI without context is noise.

But there’s a catch.

When you allow context expansion, you must answer:

- What does the AI have access to?

- Can users see it?

- Can they revoke it?

If context feels invisible, users feel exposed.

And once trust cracks, it’s hard to repair.
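One way to make those three questions concrete is to keep an explicit record of everything the AI may read, so the interface can show it and take it away. The sketch below is a minimal illustration, not a pattern taken from the framework; `ContextSource`, `ContextRegistry`, and the sample labels are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class ContextSource:
    """One item the assistant is allowed to read (hypothetical model)."""
    source_id: str
    label: str  # user-facing name, e.g. "Q3 report.pdf"
    kind: str   # e.g. "file", "chat_history", "knowledge_base"


@dataclass
class ContextRegistry:
    """Tracks what the AI can access so the UI can show and revoke it."""
    sources: dict[str, ContextSource] = field(default_factory=dict)

    def grant(self, source: ContextSource) -> None:
        self.sources[source.source_id] = source

    def revoke(self, source_id: str) -> bool:
        """Remove access; returns False if the source was never granted."""
        return self.sources.pop(source_id, None) is not None

    def visible_summary(self) -> list[str]:
        """Lines a settings panel could render as-is."""
        return [f"{s.label} ({s.kind})" for s in self.sources.values()]


registry = ContextRegistry()
registry.grant(ContextSource("f1", "Q3 report.pdf", "file"))
registry.grant(ContextSource("kb1", "Brand guidelines", "knowledge_base"))
registry.revoke("f1")
print(registry.visible_summary())
```

The point of the design is that the list the user sees and the list the model reads come from the same place, so they cannot drift apart.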

Part 3: AI Output — Trust Lives Here

This is where most teams focus. And yes, it matters. But output design isn’t just about formatting.

It’s about confidence, clarity, and perception.

The framework describes how output design shapes how results are “presented, explained, and trusted.”

Let’s break it down.

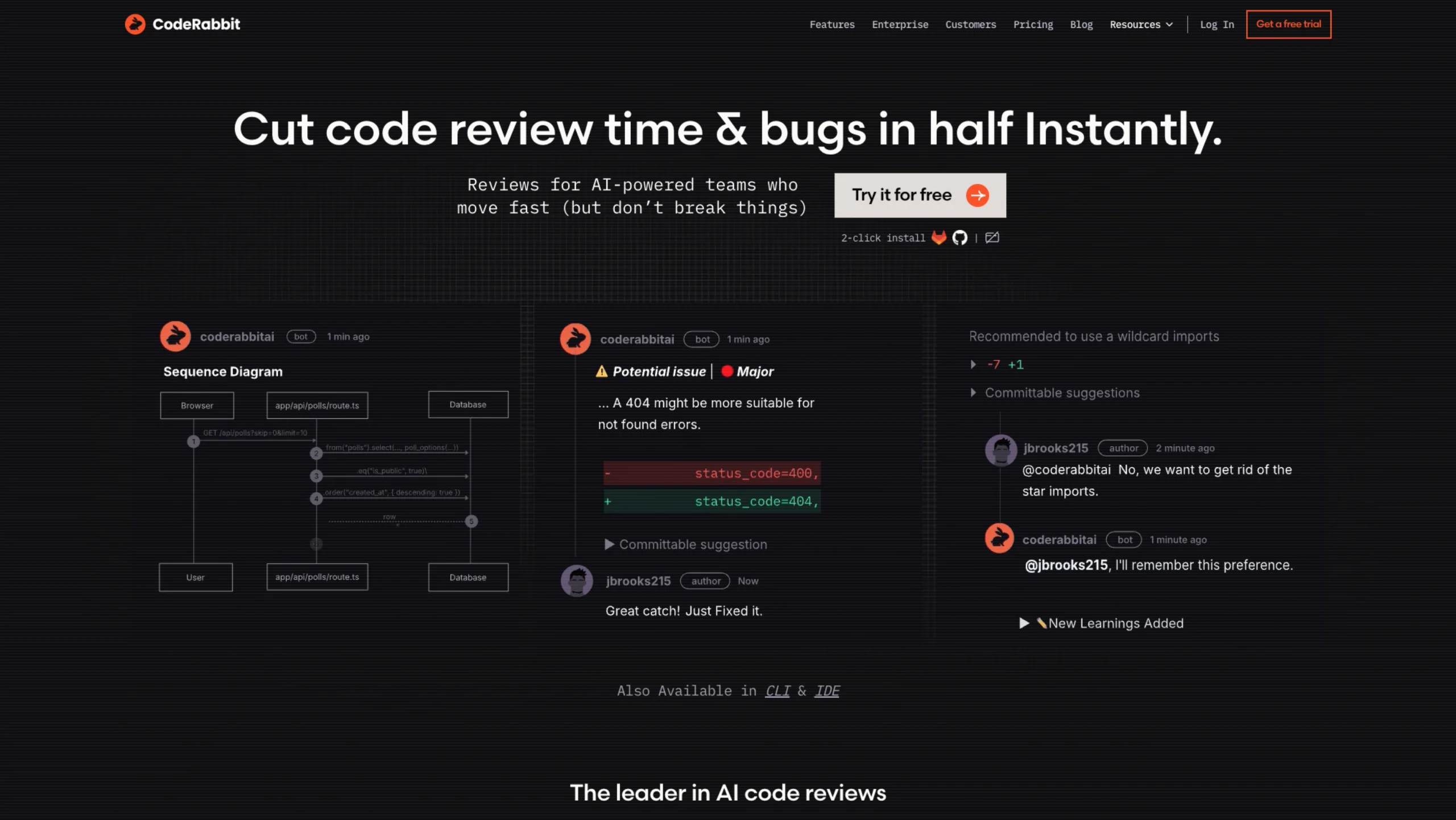

Processing — Don’t Leave Users in the Dark

AI systems perform invisible work: API calls, retrieval steps, and tool usage.

The framework asks:

Is it clear the system is working — not stalled?

Examples include:

- Streaming responses

- Parallel processing indicators

- Step-by-step in-progress states

A spinner alone isn’t enough.

Good processing design:

- Manages impatience

- Prevents abandonment

- Sets time expectations

If the system takes 10 seconds to think, explain why.

Otherwise, users assume it’s broken.
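Step-by-step in-progress states can be as simple as announcing each step before it runs. Here is a rough sketch under that assumption; `run_with_progress`, the step labels, and the time estimates are all made up for illustration.

```python
from typing import Iterator


def run_with_progress(steps: list[tuple[str, float]]) -> Iterator[str]:
    """Yield a user-facing status line before each step runs.

    Each step pairs a label with a rough time estimate in seconds.
    Both are illustrative and would come from the real pipeline.
    """
    total = len(steps)
    for index, (label, estimate_s) in enumerate(steps, start=1):
        yield f"Step {index} of {total}: {label} (about {estimate_s:.0f}s)"
        # The real work for this step would happen here.
    yield "Done"


pipeline = [
    ("Searching your documents", 3),
    ("Calling the pricing API", 5),
    ("Drafting the answer", 2),
]
for status in run_with_progress(pipeline):
    print(status)
```

Each line tells the user what is happening, how far along it is, and roughly how long it will take, which covers the three jobs listed above.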

Explainability — Why Did I Get This Result?

The framework discusses explainability and confidence signals.

Three types of signals stand out:

- Textual — “Low confidence,” “Experimental,” numeric scores

- Visual — opacity changes, badges, color cues

- Structural — ordering results by reliability

This is crucial.

AI outputs often sound confident even when wrong.

Design must calibrate trust.

If everything looks equally polished, users can’t distinguish reliable from speculative.

And that’s dangerous.
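The three signal types can live side by side in one small presentation layer. This is a sketch only: it assumes the system exposes a confidence score between 0 and 1, and the thresholds and the opacity formula are illustrative choices, not calibrated values from the framework.

```python
from dataclasses import dataclass


@dataclass
class Result:
    text: str
    confidence: float  # 0.0 to 1.0; assumed to be supplied by the system


def confidence_label(score: float) -> str:
    """Textual signal. Thresholds are illustrative, not calibrated."""
    if score >= 0.8:
        return "High confidence"
    if score >= 0.5:
        return "Medium confidence"
    return "Low confidence"


def display_opacity(score: float) -> float:
    """Visual signal: fade speculative results but keep them readable."""
    clamped = max(0.0, min(1.0, score))
    return round(0.5 + 0.5 * clamped, 2)


def present(results: list[Result]) -> list[str]:
    """Structural signal: most reliable results first."""
    ranked = sorted(results, key=lambda r: r.confidence, reverse=True)
    return [f"[{confidence_label(r.confidence)}] {r.text}" for r in ranked]


results = [
    Result("Revenue likely grew in Q3.", 0.4),
    Result("Revenue grew 12% in Q2.", 0.9),
]
for line in present(results):
    print(line)
```

With these inputs the Q2 claim is listed first and labeled high confidence, while the Q3 guess drops to the bottom with a low-confidence label.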

Types of Outputs — Not Everything Should Be Text

The framework outlines output variations: text, images, video, audio, and structured diagrams.

Examples include:

- Midjourney grids

- Runway multimodal boards

- ChatGPT text output

- Miro text-to-diagram

The insight here?

Medium shapes meaning.

A chart can clarify what text cannot.

Audio can increase accessibility.

Visual grids encourage comparison.

Designers and founders often default to chat because it’s easy.

But sometimes chat is the wrong interface.

Part 4: User Refinement — Where AI Becomes a Partner

The framework describes this stage as correction and iteration.

This is where AI stops being a vending machine.

Most outputs are almost right.

Users want to:

- Adjust tone

- Fix accuracy

- Regenerate variations

- Edit inline

Examples include:

- “Reply” to refine chat

- Regenerate image variations

- Inline editing

- Visual diffs for code

Here’s a small truth:

If users must restart the entire prompt every time something is slightly off, they will burn out.

Refinement reduces friction.

It supports flow.

And it reinforces collaboration.

Without it, AI feels disposable.

With it, AI feels responsive.
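For code output, a visual diff lets users see exactly what a refinement changed instead of rereading everything. A minimal sketch using Python's standard `difflib` module follows; `refinement_diff` and the sample snippets are hypothetical.

```python
import difflib


def refinement_diff(before: str, after: str) -> str:
    """Return a unified diff between the previous and the refined output."""
    diff = difflib.unified_diff(
        before.splitlines(),
        after.splitlines(),
        fromfile="previous",
        tofile="refined",
        lineterm="",
    )
    return "\n".join(diff)


previous = "def greet(name):\n    print('Hello ' + name)"
refined = "def greet(name):\n    print(f'Hello, {name}!')"
print(refinement_diff(previous, refined))
```

Only the changed line shows up with `-` and `+` markers, so the user can accept or reject a small edit without restarting the prompt.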

Part 5: System Learning — The Long Game

This is the most sensitive layer.

And probably the most misunderstood.

This framework explains system learning as the evolution of memory, feedback, and personalization across sessions.

Let’s unpack this carefully.

Memory Management — The Thin Line Between Smart and Creepy

Page 21 focuses on memory management.

Key design questions:

- Is it clear what the system remembers?

- Can users edit or delete memory?

- Is it opt-in or opt-out?

Persistent memory makes systems feel intelligent.

But invisible memory feels invasive.

There’s a difference between:

“Hey, I remember you prefer concise answers.”

and

“I’ve been tracking your behavior silently.”

Control changes perception.

Transparency builds trust.
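Those design questions translate fairly directly into a data structure. The sketch below assumes an opt-in model where nothing is stored until the user switches memory on; `MemoryStore` and its method names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryStore:
    """User-controllable memory. Nothing is saved until the user opts in."""
    opted_in: bool = False
    items: dict[str, str] = field(default_factory=dict)

    def remember(self, key: str, value: str) -> bool:
        """Store a preference; returns False while memory is switched off."""
        if not self.opted_in:
            return False
        self.items[key] = value
        return True

    def list_for_user(self) -> list[str]:
        """Plain-language view of everything currently remembered."""
        return [f"{key}: {value}" for key, value in self.items.items()]

    def forget(self, key: str) -> bool:
        """Delete one memory; returns False if it did not exist."""
        return self.items.pop(key, None) is not None


memory = MemoryStore()
memory.remember("answer_style", "concise")  # ignored: user has not opted in
memory.opted_in = True
memory.remember("answer_style", "concise")
print(memory.list_for_user())
```

Because `list_for_user` reads from the same dictionary the system writes to, there is no hidden memory that the user cannot inspect or delete.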

Collecting Feedback — Tiny Signals, Big Impact

Lightweight feedback patterns are discussed: thumbs up/down, star ratings, and simple prompts.

Why is this powerful?

Because not every correction needs a paragraph.

Sometimes a simple 👎 is enough.

But feedback must:

- Be quick

- Confirm that the signal was received

- Clarify whether it affects current output or future learning

Otherwise, it feels performative.
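A feedback record can carry its own scope, so the confirmation message tells the user what their thumbs up or down will actually change. This is an illustrative sketch; the `Scope` values and the wording of the messages are assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class Scope(Enum):
    THIS_RESPONSE = "this response"
    FUTURE_RESPONSES = "future responses"


@dataclass
class Feedback:
    response_id: str
    helpful: bool
    scope: Scope


def acknowledge(feedback: Feedback) -> str:
    """Return the confirmation shown right after a thumbs up or down."""
    verdict = "helpful" if feedback.helpful else "not helpful"
    if feedback.scope is Scope.THIS_RESPONSE:
        effect = "We'll use it to regenerate this response."
    else:
        effect = "We'll use it to improve future responses."
    return f"Thanks, marked as {verdict}. {effect}"


print(acknowledge(Feedback("r-42", helpful=False, scope=Scope.FUTURE_RESPONSES)))
```

One tap from the user produces an immediate acknowledgment plus a plain statement of whether it affects the current output or future learning.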

Personalization — Making AI Feel Human (Without Overstepping)

The framework highlights personalization patterns.

Examples:

- Spotify AI DJ

- Duolingo difficulty adjustment

- Midjourney style preferences

- Perplexity topic tracking

Personalization solves the “one-size-fits-all” problem.

But it raises questions:

- How visible should it be?

- When should users steer, and when should the system adapt silently?

Too much automation removes agency.

Too little makes the system feel generic.

The balance is delicate.

And it requires design judgment, not just data.

How It All Connects — The Loop, Not the Feature

The most important takeaway from the framework’s arc diagram is this:

AI products are not linear flows.

They are loops.

Onboarding shapes input.

Input shapes output.

Output enables refinement.

Refinement informs learning.

Learning reshapes onboarding.

And around it goes.

If one stage is weak, the loop breaks.

Common Mistakes Teams Make

Let’s be honest. We’ve all seen these.

- Overinvesting in model performance, underinvesting in onboarding clarity

- Hiding memory features deep in settings

- Treating explainability as optional

- Shipping chat-first interfaces for everything

- Ignoring refinement because “regenerate” feels enough

AI products fail less often because of poor models.

More often, they fail because of poor expectation design.

A Final Thought — Designing With Humility

AI systems are powerful.

They can write, generate, analyze, and synthesize.

But they’re probabilistic machines.

Design is what makes them usable.

Design is what makes them safe.

Design is what makes them trustworthy.

The missing playbook isn’t about flashy interfaces.

It’s about:

- Clear onboarding

- Thoughtful input systems

- Transparent output

- Seamless refinement

- Ethical system learning

When you build AI products, you’re not just designing screens.

You’re designing a relationship.

And relationships — whether human or machine — run on clarity, consent, feedback, and trust.

Get those right, and the rest follows.